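A previous post covered deploying Kubernetes with kubeadm. This time we install Kubernetes (k8s) v1.27.4 from binaries.

The base system environment and the containerd configuration are the same as for the kubeadm installation; see "Deploying Kubernetes with kubeadm on Anolis OS 8" for details. Here we start directly from five preconfigured hosts: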

k8s-master01 ➜ ~ cat > /etc/hosts <<EOF
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.69.10.31 k8s-master01
10.69.10.32 k8s-master02
10.69.10.33 k8s-master03
10.69.10.34 k8s-node01
10.69.10.35 k8s-node02
10.69.10.36 lb-vip # planned virtual IP (VIP)
EOF

Downloading and installing k8s and etcd

Download and install the k8s and etcd binaries

Run on master01 only

k8s-master01 ➜ ~ wget https://dl.k8s.io/v1.27.4/kubernetes-server-linux-amd64.tar.gz

k8s-master01 ➜ ~ tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

k8s-master01 ➜ ~ wget https://github.com/etcd-io/etcd/releases/download/v3.5.9/etcd-v3.5.9-linux-amd64.tar.gz

tar -xf etcd*.tar.gz && mv etcd-*/etcd /usr/local/bin/ && mv etcd-*/etcdctl /usr/local/bin/

k8s-master01 ➜ ~ kubelet --version

Kubernetes v1.27.4

k8s-master01 ➜ ~ etcdctl version

etcdctl version: 3.5.9

API version: 3.5

Copy the binaries to the other nodes

Master='k8s-master02 k8s-master03'

Work='k8s-node01 k8s-node02'

# copy the master components

for NODE in $Master; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

# copy the worker components

for NODE in $Work; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

# run on all nodes

mkdir -p /opt/cni/bin

Generating certificates

Download cfssl, CloudFlare's open-source certificate management tool

k8s-master01 ➜ ~ wget "https://ghproxy.com/https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssl_1.6.4_linux_amd64" -O /usr/local/bin/cfssl
k8s-master01 ➜ ~ wget "https://ghproxy.com/https://github.com/cloudflare/cfssl/releases/download/v1.6.4/cfssljson_1.6.4_linux_amd64" -O /usr/local/bin/cfssljson
k8s-master01 ➜ ~ chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

Generate the etcd certificates on master01

Generate the etcd CA:

k8s-master01 ➜ ~ cat > etcd-ca-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

k8s-master01 ➜ mkdir -p /etc/etcd/ssl

k8s-master01 ➜ cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

k8s-master01 ➜ ll /etc/etcd/ssl/

total 12K

drwxr-xr-x 2 root root 67 Aug 7 21:03 .

drwxr-xr-x 3 root root 17 Aug 7 20:48 ..

-rw-r--r-- 1 root root 1.1K Aug 7 21:03 etcd-ca.csr

-rw------- 1 root root 1.7K Aug 7 21:03 etcd-ca-key.pem

-rw-r--r-- 1 root root 1.3K Aug 7 21:03 etcd-ca.pem
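The expiry value of 876000h in the CA CSR above is easier to judge once converted to years. A minimal shell sketch of that conversion, assuming 24-hour days and 365-day years; the hours_to_years helper is hypothetical and only serves as an illustration:

```shell
# Hypothetical helper: convert a cfssl-style hour count to whole years,
# assuming 24-hour days and 365-day years (leap days are ignored).
hours_to_years() {
  echo $(( $1 / 24 / 365 ))
}

hours_to_years 876000   # 876000 / 24 = 36500 days, 36500 / 365 = 100
```

In other words, the certificates in this guide are issued for roughly 100 years, which is convenient for a lab but longer than most production policies would allow.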

Generate the etcd certificate:

k8s-master01 ➜ cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

mkdir /etc/etcd/ssl -p

k8s-master01 ➜ cat > etcd-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

k8s-master01 ➜ cfssl gencert \
  -ca=/etc/etcd/ssl/etcd-ca.pem \
  -ca-key=/etc/etcd/ssl/etcd-ca-key.pem \
  -config=ca-config.json \
  -hostname=127.0.0.1,k8s-master01,k8s-master02,k8s-master03,10.69.10.31,10.69.10.32,10.69.10.33,fd82:2013:0:1::31,fd82:2013:0:1::32,fd82:2013:0:1::33,::1 \
  -profile=kubernetes \
  etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd

Copy the etcd certificates to the other master nodes:

Master='k8s-master02 k8s-master03'

for NODE in $Master; do ssh $NODE "mkdir -p /etc/etcd/ssl"; for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}; done; done

Generate the k8s cluster certificates on master01

Directory that holds all certificates

k8s-master01 ➜ mkdir -p /etc/kubernetes/pki

Generate the root CA certificate

k8s-master01 ➜ cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

k8s-master01 ➜ cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

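Generate the apiserver certificate

10.96.0.1 is the first address of the service CIDR, and 10.69.10.36 is the high-availability VIP. The fd82:: entries are IPv6 addresses; remove them if you do not use IPv6: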

k8s-master01 ➜ cat > apiserver-csr.json << EOF

{

"CN": "kube-apiserver",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

EOF

k8s-master01 ➜ cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -hostname=10.96.0.1,10.69.10.36,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,s1.k8s.webmaster.me,s2.k8s.webmaster.me,s3.k8s.webmaster.me,s4.k8s.webmaster.me,s5.k8s.webmaster.me,apiserver.k8s.webmaster.me,10.69.10.31,10.69.10.32,10.69.10.33,10.69.10.34,10.69.10.35,10.69.10.36,10.69.10.37,10.69.10.38,10.69.10.39,fd82:2013:0:1::31,fd82:2013:0:1::32,fd82:2013:0:1::33,fd82:2013:0:1::34,fd82:2013:0:1::35,fd82:2013:0:1::36,fd82:2013:0:1::37,fd82:2013:0:1::38,fd82:2013:0:1::39,fd82:2013:0:1::40,::1 \
  -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver
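The 10.96.0.1 entry in the certificate's host list is derived from the service CIDR (10.96.0.0/12 in the kube-apiserver flags used later in this guide): it is the network address plus one. If you pick a different service range, the first address can be computed as in the sketch below. The first_service_ip helper is hypothetical, handles IPv4 only, and is meant as an illustration rather than a definitive tool:

```shell
# Hypothetical helper: print the first usable IPv4 address of a CIDR
# (the network address plus one). IPv4 only.
first_service_ip() {
  cidr=$1
  ip=${cidr%/*}          # address part, e.g. 10.96.0.0
  prefix=${cidr#*/}      # prefix length, e.g. 12
  old_ifs=$IFS
  IFS=.
  set -- $ip             # split the dotted quad into $1..$4
  IFS=$old_ifs
  addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
  first=$(( (addr & mask) + 1 ))
  printf '%d.%d.%d.%d\n' \
    $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
    $(( (first >> 8) & 255 )) $(( first & 255 ))
}

first_service_ip 10.96.0.0/12   # prints 10.96.0.1
```

Whatever address this yields for your own range must appear in the -hostname list, otherwise in-cluster clients that reach the API server through the kubernetes service would fail TLS verification.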

Generate the apiserver aggregation (front-proxy) certificates

k8s-master01 ➜ cat > front-proxy-ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"ca": {

"expiry": "876000h"

}

}

EOF

k8s-master01 ➜ cat > front-proxy-client-csr.json << EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

k8s-master01 ➜ cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

k8s-master01 ➜ cfssl gencert \
  -ca=/etc/kubernetes/pki/front-proxy-ca.pem \
  -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

# cfssl prints a warning here; it can be ignored

Generate the controller-manager certificate

k8s-master01 ➜ cat > manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

EOF

k8s-master01 ➜ cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

2023/08/08 14:41:03 [INFO] generate received request

2023/08/08 14:41:03 [INFO] received CSR

2023/08/08 14:41:03 [INFO] generating key: rsa-2048

2023/08/08 14:41:03 [INFO] encoded CSR

2023/08/08 14:41:03 [INFO] signed certificate with serial number 648599378407428848563643923459757490745898166295

2023/08/08 14:41:03 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Set the cluster entry in the controller-manager kubeconfig

k8s-master01 ➜ kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.69.10.36:9443 \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
Cluster "kubernetes" set.

# set the context

k8s-master01 ➜ kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
Context "system:kube-controller-manager@kubernetes" created.

# set the user entry

k8s-master01 ➜ kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/controller-manager.pem \
  --client-key=/etc/kubernetes/pki/controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
User "system:kube-controller-manager" set.

# set the default context

k8s-master01 ➜ kubectl config use-context system:kube-controller-manager@kubernetes \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
Switched to context "system:kube-controller-manager@kubernetes".

Generate the scheduler certificate

k8s-master01 ➜ cat > scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

EOF

k8s-master01 ➜ cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

2023/08/08 14:56:22 [INFO] generate received request

2023/08/08 14:56:22 [INFO] received CSR

2023/08/08 14:56:22 [INFO] generating key: rsa-2048

2023/08/08 14:56:22 [INFO] encoded CSR

2023/08/08 14:56:22 [INFO] signed certificate with serial number 275856789624972026461098745964707820685935005676

2023/08/08 14:56:22 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Set the cluster entry in the scheduler kubeconfig

k8s-master01 ➜ kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.69.10.36:9443 \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
Cluster "kubernetes" set.

k8s-master01 ➜ kubectl config set-credentials system:kube-scheduler \
  --client-certificate=/etc/kubernetes/pki/scheduler.pem \
  --client-key=/etc/kubernetes/pki/scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
User "system:kube-scheduler" set.

k8s-master01 ➜ kubectl config set-context system:kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
Context "system:kube-scheduler@kubernetes" created.

k8s-master01 ➜ kubectl config use-context system:kube-scheduler@kubernetes \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
Switched to context "system:kube-scheduler@kubernetes".

Generate the admin certificate

k8s-master01 ➜ cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

EOF

k8s-master01 ➜ cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

2023/08/08 15:02:27 [INFO] generate received request

2023/08/08 15:02:27 [INFO] received CSR

2023/08/08 15:02:27 [INFO] generating key: rsa-2048

2023/08/08 15:02:27 [INFO] encoded CSR

2023/08/08 15:02:27 [INFO] signed certificate with serial number 599163309096743852908050259591931132239411947877

2023/08/08 15:02:27 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Set the cluster entry in the admin kubeconfig

k8s-master01 ➜ kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.69.10.36:9443 \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig
Cluster "kubernetes" set.

k8s-master01 ➜ kubectl config set-credentials kubernetes-admin \
  --client-certificate=/etc/kubernetes/pki/admin.pem \
  --client-key=/etc/kubernetes/pki/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig
User "kubernetes-admin" set.

k8s-master01 ➜ kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig
Context "kubernetes-admin@kubernetes" created.

k8s-master01 ➜ kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig
Switched to context "kubernetes-admin@kubernetes".

Create the kube-proxy certificate

k8s-master01 ➜ cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-proxy",

"OU": "Kubernetes-manual"

}

]

}

EOF

k8s-master01 ➜ cfssl gencert \
  -ca=/etc/kubernetes/pki/ca.pem \
  -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-proxy-csr.json | cfssljson -bare /etc/kubernetes/pki/kube-proxy

2023/08/08 15:08:23 [INFO] generate received request

2023/08/08 15:08:23 [INFO] received CSR

2023/08/08 15:08:23 [INFO] generating key: rsa-2048

2023/08/08 15:08:23 [INFO] encoded CSR

2023/08/08 15:08:23 [INFO] signed certificate with serial number 232531878463289736054702372626782366131589497755

2023/08/08 15:08:23 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Set the cluster entry in the kube-proxy kubeconfig

k8s-master01 ➜ kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true \
  --server=https://10.69.10.36:9443 \
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
Cluster "kubernetes" set.

k8s-master01 ➜ kubectl config set-credentials kube-proxy \
  --client-certificate=/etc/kubernetes/pki/kube-proxy.pem \
  --client-key=/etc/kubernetes/pki/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
User "kube-proxy" set.

k8s-master01 ➜ kubectl config set-context kube-proxy@kubernetes \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
Context "kube-proxy@kubernetes" created.

k8s-master01 ➜ kubectl config use-context kube-proxy@kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
Switched to context "kube-proxy@kubernetes".

Create the ServiceAccount key

k8s-master01 ➜ openssl genrsa -out /etc/kubernetes/pki/sa.key 2048
Generating RSA private key, 2048 bit long modulus (2 primes)
..................+++++
...+++++
e is 65537 (0x010001)
k8s-master01 ➜ openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub
writing RSA key

Copy the certificates to the other master nodes

Master='k8s-master02 k8s-master03'

for NODE in $Master; do

ssh $NODE "mkdir -p /etc/kubernetes/pki/";

for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do

scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};

done;

for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do

scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};

done;

done

Check the certificates

26 files were generated in total (certificates, private keys, CSRs, and the ServiceAccount key pair):

k8s-master01 ➜ ~ ll /etc/kubernetes/pki/
total 108K
drwxr-xr-x 2 root root 4.0K Aug 8 15:24 .
drwxr-xr-x 3 root root 106 Aug 8 15:24 ..
-rw-r--r-- 1 root root 1.1K Aug 8 15:24 admin.csr
-rw------- 1 root root 1.7K Aug 8 15:24 admin-key.pem
-rw-r--r-- 1 root root 1.5K Aug 8 15:24 admin.pem
-rw-r--r-- 1 root root 1.8K Aug 8 15:24 apiserver.csr
-rw------- 1 root root 1.7K Aug 8 15:24 apiserver-key.pem
-rw-r--r-- 1 root root 2.2K Aug 8 15:24 apiserver.pem
-rw-r--r-- 1 root root 1.1K Aug 8 15:24 ca.csr
-rw------- 1 root root 1.7K Aug 8 15:24 ca-key.pem
-rw-r--r-- 1 root root 1.4K Aug 8 15:24 ca.pem
-rw-r--r-- 1 root root 1.1K Aug 8 15:24 controller-manager.csr
-rw------- 1 root root 1.7K Aug 8 15:24 controller-manager-key.pem
-rw-r--r-- 1 root root 1.5K Aug 8 15:24 controller-manager.pem
-rw-r--r-- 1 root root 940 Aug 8 15:24 front-proxy-ca.csr
-rw------- 1 root root 1.7K Aug 8 15:24 front-proxy-ca-key.pem
-rw-r--r-- 1 root root 1.1K Aug 8 15:24 front-proxy-ca.pem
-rw-r--r-- 1 root root 903 Aug 8 15:24 front-proxy-client.csr
-rw------- 1 root root 1.7K Aug 8 15:24 front-proxy-client-key.pem
-rw-r--r-- 1 root root 1.2K Aug 8 15:24 front-proxy-client.pem
-rw-r--r-- 1 root root 1.1K Aug 8 15:24 kube-proxy.csr
-rw------- 1 root root 1.7K Aug 8 15:24 kube-proxy-key.pem
-rw-r--r-- 1 root root 1.5K Aug 8 15:24 kube-proxy.pem
-rw------- 1 root root 1.7K Aug 8 15:24 sa.key
-rw-r--r-- 1 root root 451 Aug 8 15:24 sa.pub
-rw-r--r-- 1 root root 1.1K Aug 8 15:24 scheduler.csr
-rw------- 1 root root 1.7K Aug 8 15:24 scheduler-key.pem
-rw-r--r-- 1 root root 1.5K Aug 8 15:24 scheduler.pem
k8s-master01 ➜ ~ ls /etc/kubernetes/pki/ |wc -l
26

Configuring the k8s system components

etcd configuration

master01 configuration

cat > /etc/etcd/etcd.config.yml << EOF
name: 'k8s-master01'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://10.69.10.31:2380'
listen-client-urls: 'https://10.69.10.31:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://10.69.10.31:2380'
advertise-client-urls: 'https://10.69.10.31:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://10.69.10.31:2380,k8s-master02=https://10.69.10.32:2380,k8s-master03=https://10.69.10.33:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF

master02 configuration

cat > /etc/etcd/etcd.config.yml << EOF
name: 'k8s-master02'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://10.69.10.32:2380'
listen-client-urls: 'https://10.69.10.32:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://10.69.10.32:2380'
advertise-client-urls: 'https://10.69.10.32:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://10.69.10.31:2380,k8s-master02=https://10.69.10.32:2380,k8s-master03=https://10.69.10.33:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF

master03 configuration

cat > /etc/etcd/etcd.config.yml << EOF
name: 'k8s-master03'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://10.69.10.33:2380'
listen-client-urls: 'https://10.69.10.33:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://10.69.10.33:2380'
advertise-client-urls: 'https://10.69.10.33:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://10.69.10.31:2380,k8s-master02=https://10.69.10.32:2380,k8s-master03=https://10.69.10.33:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF
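The three etcd configurations above differ only in the node name and the node's own IP address, while the initial-cluster value is identical on every member. The sketch below derives both from a single member list, which can help avoid copy-and-paste mistakes. The MEMBERS variable and the initial_cluster and node_settings helpers are hypothetical names introduced here for illustration; they are not part of etcd or of the original setup:

```shell
# Hypothetical member list (name=IP), taken from the hosts table of this guide.
MEMBERS="k8s-master01=10.69.10.31 k8s-master02=10.69.10.32 k8s-master03=10.69.10.33"

# Build the shared initial-cluster value: name=https://IP:2380, comma separated.
initial_cluster() {
  out=""
  for member in $MEMBERS; do
    out="${out:+$out,}${member%%=*}=https://${member#*=}:2380"
  done
  printf '%s\n' "$out"
}

# Print the settings that differ between nodes for one member.
node_settings() {
  name=$1
  ip=$2
  printf "name: '%s'\n" "$name"
  printf "listen-peer-urls: 'https://%s:2380'\n" "$ip"
  printf "listen-client-urls: 'https://%s:2379,http://127.0.0.1:2379'\n" "$ip"
  printf "initial-advertise-peer-urls: 'https://%s:2380'\n" "$ip"
  printf "advertise-client-urls: 'https://%s:2379'\n" "$ip"
}

# Show the node-specific lines plus the shared initial-cluster line per member.
for m in $MEMBERS; do
  node_settings "${m%%=*}" "${m#*=}"
  printf "initial-cluster: '%s'\n\n" "$(initial_cluster)"
done
```

Comparing this output against each node's /etc/etcd/etcd.config.yml is a quick way to confirm that no address was left pointing at another master.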

Create the systemd service

Run the following on all master nodes

Create etcd.service

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
Alias=etcd3.service
EOF

Link the etcd certificates and start the service

mkdir /etc/kubernetes/pki/etcd
ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/
systemctl daemon-reload
systemctl enable --now etcd

Check the etcd status

k8s-master01 ➜ export ETCDCTL_API=3
k8s-master01 ➜ etcdctl --endpoints="10.69.10.33:2379,10.69.10.32:2379,10.69.10.31:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table
+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|     ENDPOINT     |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| 10.69.10.33:2379 | b94a51895c30f1d8 |   3.5.9 |   20 kB |     false |      false |         2 |          9 |                  9 |        |
| 10.69.10.32:2379 | df26027ebcf77ffe |   3.5.9 |   20 kB |     false |      false |         2 |          9 |                  9 |        |
| 10.69.10.31:2379 | 91783f6bc6a30ab8 |   3.5.9 |   20 kB |      true |      false |         2 |          9 |                  9 |        |
+------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

Configuring load balancing

Install keepalived and haproxy (run on all three master nodes)

yum -y install keepalived haproxy

Configure haproxy

Modify the haproxy configuration file (run on all three master nodes)

mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.backup
cat >/etc/haproxy/haproxy.cfg<<"EOF"
global
  maxconn 2000
  ulimit-n 16384
  log 127.0.0.1 local0 err
  stats timeout 30s

defaults
  log global
  mode http
  option httplog
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s

frontend monitor-in
  bind *:33305
  mode http
  option httplog
  monitor-uri /monitor

frontend k8s-master
  bind 0.0.0.0:9443
  bind 127.0.0.1:9443
  mode tcp
  option tcplog
  tcp-request inspect-delay 5s
  default_backend k8s-master

backend k8s-master
  mode tcp
  option tcplog
  option tcp-check
  balance roundrobin
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server k8s-master01 10.69.10.31:6443 check
  server k8s-master02 10.69.10.32:6443 check
  server k8s-master03 10.69.10.33:6443 check
EOF

Configure the keepalived nodes

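Configure master01 as the keepalived MASTER node. Note that, according to the keepalived documentation, nopreempt only takes effect when the initial state is BACKUP, so with state MASTER on this node the option may not behave as intended.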

k8s-master01 ➜ mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.backup

k8s-master01 ➜ cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

# adjust to your NIC name

interface enp1s0

mcast_src_ip 10.69.10.31

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.69.10.36

}

track_script {

chk_apiserver

}

}

EOF

Configure master02 as a keepalived BACKUP node

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

# adjust to your NIC name

interface enp1s0

mcast_src_ip 10.69.10.32

virtual_router_id 51

priority 80

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.69.10.36

}

track_script {

chk_apiserver

}

}

EOF

Configure master03 as a keepalived BACKUP node

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

# adjust to your NIC name

interface enp1s0

mcast_src_ip 10.69.10.33

virtual_router_id 51

priority 50

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.69.10.36

}

track_script {

chk_apiserver

}

}

EOF

Health check script

Set up the health check script (run on all three master nodes)

cat > /etc/keepalived/check_apiserver.sh << EOF
#!/bin/bash
# Check up to three times, one second apart, whether haproxy is running.
err=0
for k in \$(seq 1 3)
do
    check_code=\$(pgrep haproxy)
    if [[ \$check_code == "" ]]; then
        err=\$(expr \$err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done
# If haproxy was missing on every attempt, stop keepalived so the VIP moves.
if [[ \$err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi
EOF

chmod +x /etc/keepalived/check_apiserver.sh
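The vrrp_script blocks above set weight -5, while the three nodes use priorities 100, 80, and 50. Assuming keepalived's usual behaviour that a negative weight lowers a node's priority while its tracked script is failing, the sketch below works through the arithmetic with a hypothetical effective_priority helper. A drop of 5 alone would leave master01 at 95, still above master02's 80, which is presumably why the check script stops keepalived outright rather than relying on the weight:

```shell
# Hypothetical helper: effective VRRP priority for a node whose tracked
# script has a negative weight. Assumption: while the script is failing,
# the (negative) weight is added to the base priority.
effective_priority() {
  base=$1
  weight=$2
  script_ok=$3
  if [ "$script_ok" = "yes" ]; then
    echo "$base"
  else
    echo $(( $base + $weight ))
  fi
}

m1=$(effective_priority 100 -5 no)    # master01 with haproxy down
m2=$(effective_priority 80 -5 yes)    # master02 healthy
if [ "$m1" -gt "$m2" ]; then
  echo "master01 ($m1) still outranks master02 ($m2)"
fi
```

If you would rather let the priority drop trigger the failover, the weight would need to exceed the gap between neighbouring priorities; that is a design choice outside the configuration shown in this guide.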

Start the haproxy and keepalived services

systemctl daemon-reload
systemctl enable --now haproxy
systemctl enable --now keepalived

Test high availability

k8s-master03 ➜ ~ ping 10.69.10.36
PING 10.69.10.36 (10.69.10.36) 56(84) bytes of data.
64 bytes from 10.69.10.36: icmp_seq=1 ttl=64 time=0.546 ms
64 bytes from 10.69.10.36: icmp_seq=2 ttl=64 time=0.487 ms
64 bytes from 10.69.10.36: icmp_seq=3 ttl=64 time=0.383 ms
64 bytes from 10.69.10.36: icmp_seq=4 ttl=64 time=0.426 ms
^C
--- 10.69.10.36 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3112ms
rtt min/avg/max/mdev = 0.383/0.460/0.546/0.065 ms
k8s-master03 ➜ ~ telnet 10.69.10.36 9443
Trying 10.69.10.36...
Connected to 10.69.10.36.
Escape character is '^]'.
Connection closed by foreign host.

Configuring the k8s components

Create the following directories on all k8s nodes

mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

Create the apiserver service

master01 configuration

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --advertise-address=10.69.10.31 \
      --service-cluster-ip-range=10.96.0.0/12,fd00::/108 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://10.69.10.31:2379,https://10.69.10.32:2379,https://10.69.10.33:2379 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --enable-aggregator-routing=true
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

master02 configuration

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --advertise-address=10.69.10.32 \
      --service-cluster-ip-range=10.96.0.0/12,fd00::/108 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://10.69.10.31:2379,https://10.69.10.32:2379,https://10.69.10.33:2379 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --enable-aggregator-routing=true
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

master03 configuration

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --advertise-address=10.69.10.33 \
      --service-cluster-ip-range=10.96.0.0/12,fd00::/108 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://10.69.10.31:2379,https://10.69.10.32:2379,https://10.69.10.33:2379 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User \
      --enable-aggregator-routing=true
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

Start kube-apiserver

Run on all master nodes:

systemctl daemon-reload
systemctl enable --now kube-apiserver
systemctl restart kube-apiserver
systemctl status kube-apiserver
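Besides `systemctl status`, each instance can be probed over HTTPS. The following is a minimal sketch, assuming anonymous access to `/readyz` is still allowed (the upstream default) and that the API servers listen on port 6443 as configured above. The function name `check_apiservers` is only illustrative.

```shell
#!/usr/bin/env bash
# Probe /readyz on every kube-apiserver passed as an argument.
# Prints one line per host; returns non-zero if any host is not ready.
# -k skips certificate verification, which is acceptable for a quick smoke test only.
check_apiservers() {
  local host rc=0
  for host in "$@"; do
    if curl -sk --max-time 5 "https://${host}:6443/readyz" | grep -qx 'ok'; then
      echo "${host}: ready"
    else
      echo "${host}: NOT ready"
      rc=1
    fi
  done
  return "$rc"
}

# Example (addresses taken from /etc/hosts above):
#   check_apiservers 10.69.10.31 10.69.10.32 10.69.10.33
```

If a host reports NOT ready, `journalctl -u kube-apiserver` on that node is usually the next place to look.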

Configure the Controller-Manager service

Create the systemd service file

All master nodes use the same configuration. 172.16.0.0/12 is the pod CIDR; adjust it to your needs.

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--v=2 \

--bind-address=0.0.0.0 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--service-cluster-ip-range=10.96.0.0/12,fd00::/108 \

--cluster-cidr=172.16.0.0/12,fc00::/48 \

--node-cidr-mask-size-ipv4=24 \

--node-cidr-mask-size-ipv6=64 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Start kube-controller-manager

Start the service and check its status:

systemctl daemon-reload
systemctl enable --now kube-controller-manager
systemctl restart kube-controller-manager
systemctl status kube-controller-manager

Configure the kube-scheduler service

Create the systemd service file

Configure this on all master nodes; the configuration is identical on each.

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--v=2 \

--bind-address=0.0.0.0 \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Start kube-scheduler

Start the service and check its status:

systemctl daemon-reload
systemctl enable --now kube-scheduler
systemctl restart kube-scheduler
systemctl status kube-scheduler

TLS Bootstrapping configuration

Configure on master01. The server address points at the load balancer VIP (lb-vip, 10.69.10.36) on port 9443.

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true --server=https://10.69.10.36:9443 \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-credentials tls-bootstrap-token-user \
  --token=c8ad9c.2e4d610cf3e7426e \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-context tls-bootstrap-token-user@kubernetes \
  --cluster=kubernetes \
  --user=tls-bootstrap-token-user \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config use-context tls-bootstrap-token-user@kubernetes \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config
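The token passed to `set-credentials` has the form `<token-id>.<token-secret>`: six characters, a dot, then sixteen characters, all lowercase letters or digits. Rather than reusing the sample value, you may prefer to generate your own. The sketch below is one way to do that; the helper names `gen_bootstrap_token` and `is_valid_bootstrap_token` are hypothetical, and the random source (`/dev/urandom`) is an assumption about the host.

```shell
#!/usr/bin/env bash
# Generate a random bootstrap token: 6 hex chars, a dot, 16 hex chars.
# Hex digits are a subset of the allowed [a-z0-9] alphabet.
gen_bootstrap_token() {
  local id secret
  id="$(head -c 3 /dev/urandom | od -An -tx1 | tr -d ' \n')"
  secret="$(head -c 8 /dev/urandom | od -An -tx1 | tr -d ' \n')"
  printf '%s.%s\n' "$id" "$secret"
}

# Return 0 if the argument looks like a well-formed bootstrap token.
is_valid_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Example:
#   TOKEN="$(gen_bootstrap_token)"
#   is_valid_bootstrap_token "$TOKEN" && echo "use --token=$TOKEN"
```

Whatever value is chosen, the same token-id and token-secret must also appear in the `bootstrap-token-<token-id>` Secret, otherwise the kubelet cannot authenticate.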

Check the cluster status

k8s-master01 ➜ kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE   ERROR
controller-manager   Healthy   ok
etcd-0               Healthy
etcd-2               Healthy
etcd-1               Healthy
scheduler            Healthy   ok
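Since `kubectl get cs` is deprecated, another way to confirm that the controller manager and scheduler are alive is to look at their leader-election Leases. This is a small sketch assuming the default lease-based leader election in the `kube-system` namespace; `show_leader` is an illustrative helper name.

```shell
#!/usr/bin/env bash
# Print which instance currently holds the leader-election Lease
# for a control-plane component (assumes kubectl is already configured).
show_leader() {
  local component="$1" holder
  holder="$(kubectl -n kube-system get lease "$component" \
    -o jsonpath='{.spec.holderIdentity}')" || return 1
  echo "${component}: ${holder:-<none>}"
}

# Example:
#   show_leader kube-controller-manager
#   show_leader kube-scheduler
```

With `--leader-elect=true` on three masters, exactly one instance of each component should appear as the holder at any time.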

Create bootstrap.secret

The token-id and token-secret below must match the token written into bootstrap-kubelet.kubeconfig.

k8s-master01 ➜ cat > bootstrap.secret.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-c8ad9c
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: c8ad9c
  token-secret: 2e4d610cf3e7426e
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver
EOF

k8s-master01 ➜ kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-c8ad9c created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

Node configuration

On master01, copy the certificates and kubeconfig files to the other nodes:

cd /etc/kubernetes/

for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do ssh $NODE mkdir -p /etc/kubernetes/pki; for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig kube-proxy.kubeconfig; do scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}; done; done

kubelet configuration

Configure the kubelet service

Configure on all nodes:

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet-conf.yml \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock \

--node-labels=node.kubernetes.io/node=

[Install]

WantedBy=multi-user.target

EOF

Create the kubelet configuration file

Configure on all nodes:

cat > /etc/kubernetes/kubelet-conf.yml <<EOF
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
EOF

Start kubelet

systemctl daemon-reload
systemctl enable --now kubelet
systemctl restart kubelet
systemctl status kubelet
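When the kubelet starts with a bootstrap kubeconfig, it submits a certificate signing request (CSR). With the ClusterRoleBindings created earlier, these requests should be approved automatically. If a node does not register, listing the CSRs that are still pending can help. The snippet below is a rough sketch; `pending_csrs` is an illustrative name, and it assumes the CONDITION column is the last column of `kubectl get csr` output.

```shell
#!/usr/bin/env bash
# List the names of CSRs whose condition is still "Pending".
pending_csrs() {
  kubectl get csr --no-headers 2>/dev/null | awk '$NF == "Pending" { print $1 }'
}

# Example: approve everything that is pending (review the list first).
#   for csr in $(pending_csrs); do kubectl certificate approve "$csr"; done
```

An empty result together with all five nodes in `kubectl get node` suggests bootstrapping worked as intended.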

kube-proxy configuration

Send the kubeconfig to the other nodes

for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig; done

Configure the kube-proxy service file

Add the kube-proxy service file on all k8s nodes:

cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

Add the kube-proxy configuration

Add the kube-proxy configuration on all k8s nodes:

cat > /etc/kubernetes/kube-proxy.yaml << EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12,fc00::/48
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
EOF

Start kube-proxy

systemctl daemon-reload
systemctl restart kube-proxy
systemctl enable --now kube-proxy
systemctl status kube-proxy
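The configuration requests `mode: "ipvs"`, but it can be worth confirming which mode kube-proxy actually ended up in, for instance when the IPVS kernel modules are missing. A minimal sketch, assuming the metrics endpoint is bound to 127.0.0.1:10249 as configured above and that it serves `/proxyMode`; the function names are illustrative.

```shell
#!/usr/bin/env bash
# Ask the local kube-proxy which proxy mode it is running in.
kube_proxy_mode() {
  curl -s --max-time 3 "http://127.0.0.1:10249/proxyMode"
}

# Succeed only if the reported mode is ipvs.
expect_ipvs() {
  local mode
  mode="$(kube_proxy_mode)"
  if [ "$mode" = "ipvs" ]; then
    echo "kube-proxy mode: ipvs"
  else
    echo "kube-proxy mode: ${mode:-unknown} (expected ipvs)"
    return 1
  fi
}

# Example (run on each node):
#   expect_ipvs
```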

Install the network plugin

Install and configure Calico

Configure Calico

Download the Calico manifest

wget https://raw.githubusercontent.com/projectcalico/calico/master/manifests/calico-typha.yaml -O calico.yaml

Change the Calico pod CIDR. IPv4-only configuration:

vim calico.yaml

# In the calico-config ConfigMap
"ipam": {
    "type": "calico-ipam"
},

# In the env section of the calico-node container
- name: IP
  value: "autodetect"
- name: CALICO_IPV4POOL_CIDR
  value: "172.16.0.0/12"
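For the IPv4-only case, the pool CIDR can also be set without opening an editor. The sketch below assumes the downloaded manifest still ships `CALICO_IPV4POOL_CIDR` commented out with the default value `192.168.0.0/16`. Because the manifest is fetched from the `master` branch, that layout may differ, so check the result with `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml`. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Uncomment CALICO_IPV4POOL_CIDR in a Calico manifest and set its value.
# Usage: set_calico_ipv4_pool <manifest> <cidr>
set_calico_ipv4_pool() {
  local file="$1" cidr="$2" tmp
  tmp="$(mktemp)" || return 1
  # Keep the original indentation: only the leading "# " marker is removed.
  sed -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
      -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
      "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back in place so the file keeps its permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example:
#   set_calico_ipv4_pool calico.yaml 172.16.0.0/12
```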

IPv4+IPv6 dual-stack configuration:

vim calico.yaml

# In the calico-config ConfigMap
"ipam": {
    "type": "calico-ipam",
    "assign_ipv4": "true",
    "assign_ipv6": "true"
},

# In the env section of the calico-node container
- name: IP
  value: "autodetect"
- name: IP6
  value: "autodetect"
- name: CALICO_IPV4POOL_CIDR
  value: "172.16.0.0/12"
- name: CALICO_IPV6POOL_CIDR
  value: "fc00::/48"
- name: FELIX_IPV6SUPPORT
  value: "true"

Servers in mainland China can use a domestic registry mirror:

sed -i "s#docker.io/calico/#m.daocloud.io/docker.io/calico/#g" calico.yaml

Deploy Calico

kubectl apply -f calico.yaml

Check the cluster status

k8s-master01 ➜ kubectl get node
NAME           STATUS   ROLES    AGE   VERSION
k8s-master01   Ready    <none>   30m   v1.27.4
k8s-master02   Ready    <none>   30m   v1.27.4
k8s-master03   Ready    <none>   30m   v1.27.4
k8s-node01     Ready    <none>   30m   v1.27.4
k8s-node02     Ready    <none>   30m   v1.27.4

k8s-master01 ➜ kubectl describe node | grep Runtime
  Container Runtime Version:  containerd://1.7.2
  Container Runtime Version:  containerd://1.7.2
  Container Runtime Version:  containerd://1.7.2
  Container Runtime Version:  containerd://1.7.2
  Container Runtime Version:  containerd://1.7.2

k8s-master01 ➜ kubectl get pod -A
NAMESPACE     NAME                                      READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-8787c9999-wvcpf   1/1     Running   0          6m
kube-system   calico-node-5ctd6                         1/1     Running   0          6m
kube-system   calico-node-rq27f                         1/1     Running   0          6m
kube-system   calico-node-rwbmz                         1/1     Running   0          6m
kube-system   calico-node-rzfpd                         1/1     Running   0          6m
kube-system   calico-node-sdzkb                         1/1     Running   0          6m
kube-system   calico-typha-76544b4c45-qnqmw             1/1     Running   0          6m

Install CoreDNS

Perform the following steps on master01 only.

Install Helm 3

k8s-master01 ➜ curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
k8s-master01 ➜ chmod 700 get_helm.sh
k8s-master01 ➜ ./get_helm.sh
Downloading https://get.helm.sh/helm-v3.12.2-linux-amd64.tar.gz
Verifying checksum... Done.
Preparing to install helm into /usr/local/bin
helm installed into /usr/local/bin/helm
k8s-master01 ➜ helm list
NAME    NAMESPACE    REVISION    UPDATED    STATUS    CHART    APP VERSION

Download CoreDNS

k8s-master01 ➜ helm repo add coredns https://coredns.github.io/helm
k8s-master01 ➜ helm pull coredns/coredns
k8s-master01 ➜ tar xvf coredns-*.tgz

Modify the configuration

Set the service IP address. The clusterIP in coredns/values.yaml must match the clusterDNS address (10.96.0.10) in the kubelet configuration:

k8s-master01 ➜ cat coredns/values.yaml | grep clusterIP:
  clusterIP: "10.96.0.10"

Servers in mainland China can use a domestic registry mirror:

sed -i "s#coredns/#m.daocloud.io/docker.io/coredns/#g" coredns/values.yaml
sed -i "s#registry.k8s.io/#m.daocloud.io/registry.k8s.io/#g" coredns/values.yaml

Deploy CoreDNS

helm install coredns ./coredns/ -n kube-system

Check the DNS service

k8s-master01 ➜ kubectl get pod -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
calico-kube-controllers-8787c9999-wvcpf   1/1     Running   0          10m
calico-node-5ctd6                         1/1     Running   0          10m
calico-node-rq27f                         1/1     Running   0          10m
calico-node-rwbmz                         1/1     Running   0          10m
calico-node-rzfpd                         1/1     Running   0          10m
calico-node-sdzkb                         1/1     Running   0          10m
calico-typha-76544b4c45-qnqmw             1/1     Running   0          10m
coredns-coredns-67f64d7dc9-gprsp          1/1     Running   0          118s

Install Metrics Server

Perform the following steps on master01 only.

Download and configure Metrics-server

Download the high-availability manifest and modify the key settings:

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability.yaml

vim high-availability.yaml

---
# 1
defaultArgs:
- --cert-dir=/tmp
- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
- --kubelet-use-node-status-port
- --metric-resolution=15s
- --kubelet-insecure-tls
- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem
- --requestheader-username-headers=X-Remote-User
- --requestheader-group-headers=X-Remote-Group
- --requestheader-extra-headers-prefix=X-Remote-Extra-

# 2
volumeMounts:
- mountPath: /tmp
  name: tmp-dir
- name: ca-ssl
  mountPath: /etc/kubernetes/pki

# 3
volumes:
- emptyDir: {}
  name: tmp-dir
- name: ca-ssl
  hostPath:
    path: /etc/kubernetes/pki
---

Servers in mainland China can use a domestic registry mirror:

sed -i "s#registry.k8s.io/#m.daocloud.io/registry.k8s.io/#g" *.yaml

Deploy Metrics-server

kubectl apply -f high-availability.yaml

View node resource usage

k8s-master01 ➜ kubectl top node
NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master01   171m         8%     1283Mi          68%
k8s-master02   123m         6%     1172Mi          62%
k8s-master03   145m         7%     1164Mi          62%
k8s-node01     78m          3%     607Mi           32%
k8s-node02     73m          3%     591Mi           31%
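If `kubectl top node` returns an error instead of the table above, the aggregated metrics API may not be available yet. The following sketch checks the `Available` condition of the `v1beta1.metrics.k8s.io` APIService; the helper name `metrics_api_available` is illustrative.

```shell
#!/usr/bin/env bash
# Return 0 when the aggregated metrics API reports Available=True.
metrics_api_available() {
  local status
  status="$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}')" || return 1
  [ "$status" = "True" ]
}

# Example: wait up to about a minute before giving up.
#   for _ in $(seq 1 12); do metrics_api_available && break; sleep 5; done
```

This check depends on the aggregation layer flags (`--requestheader-*`, `--proxy-client-*`) configured on the API servers earlier.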

Cluster verification

Deploy a pod

k8s-master01 ➜ cat<<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: docker.io/library/busybox:1.28
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF
pod/busybox created

k8s-master01 ➜ kubectl get pod
NAME      READY   STATUS    RESTARTS   AGE
busybox   1/1     Running   0          35s

Resolve a name in the default namespace

Use the pod to resolve the kubernetes Service in the default namespace:

k8s-master01 ➜ kubectl exec busybox -n default -- nslookup kubernetes
Server:    10.96.0.10
Address 1: 10.96.0.10 coredns-coredns.kube-system.svc.cluster.local

Name:      kubernetes
Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

Test whether names in another namespace can be resolved

k8s-master01 ➜ kubectl get svc -A
NAMESPACE     NAME              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)         AGE
default       kubernetes        ClusterIP   10.96.0.1        <none>        443/TCP         24h
kube-system   calico-typha      ClusterIP   10.106.170.221   <none>        5473/TCP        4h33m
kube-system   coredns-coredns   ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP   44m
kube-system   metrics-server    ClusterIP   10.98.255.190    <none>        443/TCP         27m

k8s-master01 ➜ kubectl exec busybox -n default -- nslookup coredns-coredns.kube-system
Server:    10.96.0.10
Address 1: 10.96.0.10 coredns-coredns.kube-system.svc.cluster.local

Name:      coredns-coredns.kube-system
Address 1: 10.96.0.10 coredns-coredns.kube-system.svc.cluster.local

Test node connectivity

Test connectivity from the other nodes: every node must be able to reach the kubernetes Service on port 443 and the DNS Service (here coredns-coredns) on port 53.

k8s-node01 ➜ ~ telnet 10.96.0.1 443
Trying 10.96.0.1...
Connected to 10.96.0.1.
Escape character is '^]'.
^]
telnet> Connection closed.

k8s-node01 ➜ ~ telnet 10.96.0.10 53
Trying 10.96.0.10...
Connected to 10.96.0.10.
Escape character is '^]'.
Connection closed by foreign host.

k8s-node01 ➜ ~ curl 10.96.0.10:53
curl: (52) Empty reply from server
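On hosts without telnet, the same TCP reachability check can be done with bash alone. This is a small sketch that relies on bash's `/dev/tcp` pseudo-device and on the coreutils `timeout` command being available; `port_open` is an illustrative name.

```shell
#!/usr/bin/env bash
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example (run on every node; the addresses are the Service IPs shown above):
#   port_open 10.96.0.1 443 && echo "kubernetes svc reachable"
#   port_open 10.96.0.10 53 && echo "DNS svc reachable"
```

Note that this only covers TCP; DNS queries normally use UDP port 53 first, which the earlier nslookup test from inside a pod exercises.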

Pods must be able to reach each other

k8s-master01 ➜ kubectl get pod -owide
NAME      READY   STATUS    RESTARTS   AGE     IP             NODE           NOMINATED NODE   READINESS GATES
busybox   1/1     Running   0          9m39s   172.25.92.66   k8s-master02   <none>           <none>

k8s-master01 ➜ kubectl get pod -n kube-system -owide
NAME                                      READY   STATUS    RESTARTS   AGE     IP               NODE           NOMINATED NODE   READINESS GATES
calico-kube-controllers-8787c9999-wvcpf   1/1     Running   0          4h39m   172.25.244.193   k8s-master01   <none>           <none>
calico-node-5ctd6                         1/1     Running   0          4h39m   10.69.10.34      k8s-node01     <none>           <none>
calico-node-rq27f                         1/1     Running   0          4h39m   10.69.10.33      k8s-master03   <none>           <none>
calico-node-rwbmz                         1/1     Running   0          4h39m   10.69.10.35      k8s-node02     <none>           <none>
calico-node-rzfpd                         1/1     Running   0          4h39m   10.69.10.31      k8s-master01   <none>           <none>
calico-node-sdzkb                         1/1     Running   0          4h39m   10.69.10.32      k8s-master02   <none>           <none>
calico-typha-76544b4c45-qnqmw             1/1     Running   0          4h39m   10.69.10.31      k8s-master01   <none>           <none>
coredns-coredns-67f64d7dc9-gprsp          1/1     Running   0          50m     172.18.195.1     k8s-master03   <none>           <none>
metrics-server-7bf65989c5-bh6fv           1/1     Running   0          33m     172.27.14.193    k8s-node02     <none>           <none>
metrics-server-7bf65989c5-pdwmw           1/1     Running   0          33m     172.17.125.1     k8s-node01     <none>           <none>

Enter the busybox pod and ping a pod on another node (here 10.69.10.34, the calico-node pod on k8s-node01, which uses the host network):

k8s-master01 ➜ kubectl exec -ti busybox -- sh
/ # ping 10.69.10.34
PING 10.69.10.34 (10.69.10.34): 56 data bytes
64 bytes from 10.69.10.34: seq=0 ttl=63 time=1.117 ms
64 bytes from 10.69.10.34: seq=1 ttl=63 time=0.687 ms
64 bytes from 10.69.10.34: seq=2 ttl=63 time=0.695 ms
64 bytes from 10.69.10.34: seq=3 ttl=63 time=0.415 ms
^C
--- 10.69.10.34 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.415/0.728/1.117 ms
/ #

Multi-replica test

Create a Deployment with three replicas; the three replicas are scheduled onto different nodes.

k8s-master01 ➜ cat > deployments.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF

k8s-master01 ➜ kubectl apply -f deployments.yaml
deployment.apps/nginx-deployment created

k8s-master01 ➜ kubectl get pod -owide
NAME                               READY   STATUS    RESTARTS   AGE   IP              NODE           NOMINATED NODE   READINESS GATES
busybox                            1/1     Running   0          13m   172.25.92.66    k8s-master02   <none>           <none>
nginx-deployment-55f598f8d-25qx9   1/1     Running   0          28s   172.17.125.2    k8s-node01     <none>           <none>
nginx-deployment-55f598f8d-8kvmv   1/1     Running   0          28s   172.18.195.2    k8s-master03   <none>           <none>
nginx-deployment-55f598f8d-dq224   1/1     Running   0          28s   172.27.14.194   k8s-node02     <none>           <none>

# Delete the Deployment once the test is done
k8s-master01 ➜ kubectl delete -f deployments.yaml
deployment.apps "nginx-deployment" deleted

Install ingress

Configure and deploy

k8s-master01 ➜ wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/cloud/deploy.yaml
k8s-master01 ➜ kubectl apply -f deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
deployment.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

Install cert-manager

Add the repo

k8s-master01 ➜ helm repo add jetstack https://charts.jetstack.io
k8s-master01 ➜ helm repo update

Install v1.11 with Helm

k8s-master01 ➜ helm install \
  cert-manager jetstack/cert-manager \
  --namespace cert-manager --create-namespace \
  --set ingressShim.defaultIssuerName=letsencrypt-prod \
  --set ingressShim.defaultIssuerKind=ClusterIssuer \
  --set ingressShim.defaultIssuerGroup=cert-manager.io \
  --set installCRDs=true \
  --version v1.11.4
NAME: cert-manager
LAST DEPLOYED: Fri Aug 11 00:29:57 2023
NAMESPACE: cert-manager
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
cert-manager v1.11.4 has been deployed successfully!

In order to begin issuing certificates, you will need to set up a ClusterIssuer
or Issuer resource (for example, by creating a 'letsencrypt-staging' issuer).

More information on the different types of issuers and how to configure them
can be found in our documentation:

https://cert-manager.io/docs/configuration/

For information on how to configure cert-manager to automatically provision
Certificates for Ingress resources, take a look at the `ingress-shim`
documentation:

https://cert-manager.io/docs/usage/ingress/

Check the installed cert-manager

k8s-master01 ➜ kubectl get crd | grep cert
certificaterequests.cert-manager.io   2023-08-10T16:29:58Z
certificates.cert-manager.io          2023-08-10T16:29:58Z
challenges.acme.cert-manager.io       2023-08-10T16:29:58Z
clusterissuers.cert-manager.io        2023-08-10T16:29:58Z
issuers.cert-manager.io               2023-08-10T16:29:58Z
orders.acme.cert-manager.io           2023-08-10T16:29:58Z

k8s-master01 ➜ kubectl get po -n cert-manager
NAME                                       READY   STATUS    RESTARTS   AGE
cert-manager-549c8f4dfc-29dkh              1/1     Running   0          5m2s
cert-manager-cainjector-7ffc56d8c5-xs7qv   1/1     Running   0          5m2s
cert-manager-webhook-dcc6cd765-rf8cz       1/1     Running   0          5m2s

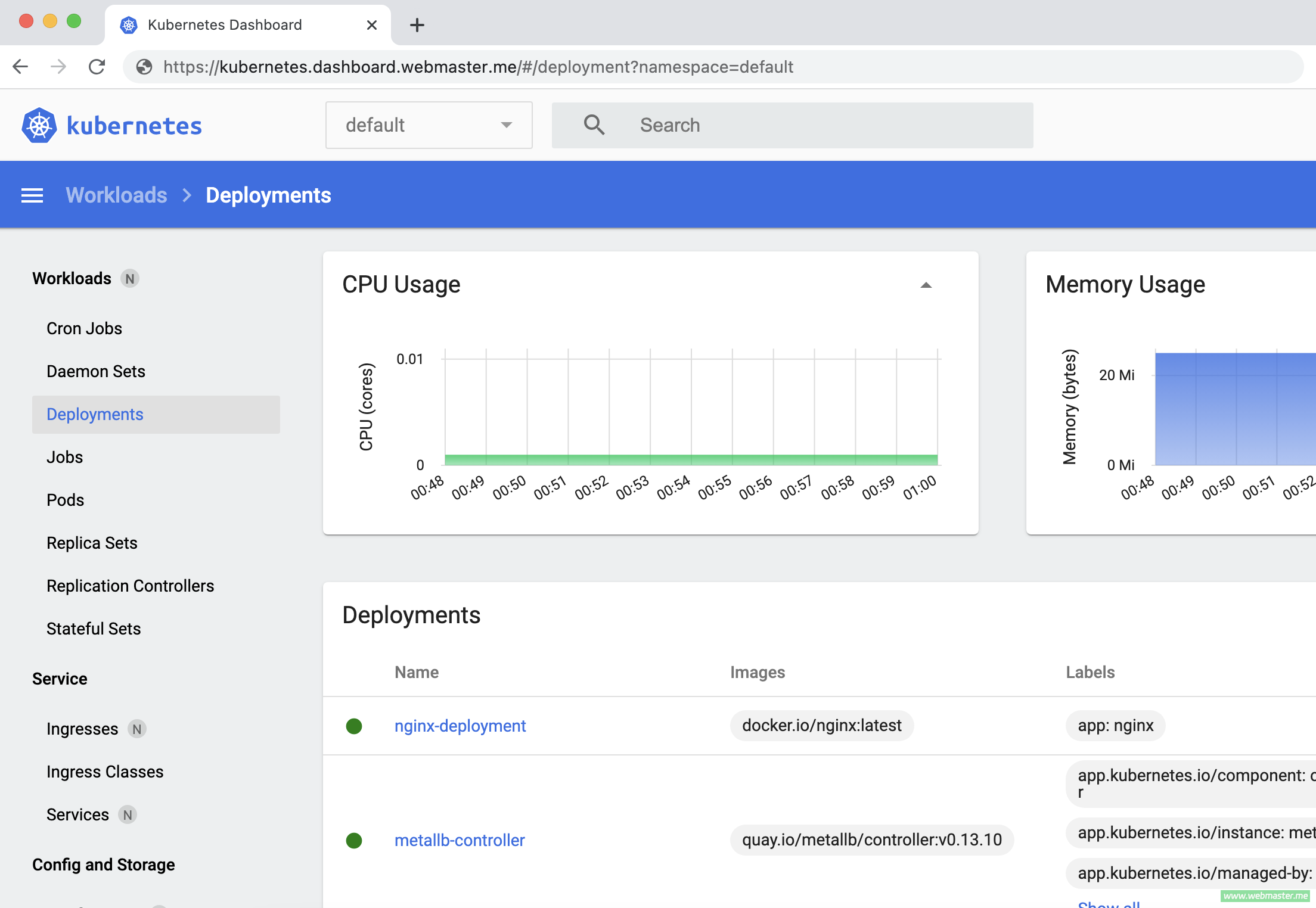

Install MetalLB

The dashboard installed later is exposed through a LoadBalancer Service. For it to obtain an EXTERNAL-IP, a load balancer implementation must be deployed.

Add the repo and install

k8s-master01 ➜ helm repo add metallb https://metallb.github.io/metallb
k8s-master01 ➜ helm install metallb metallb/metallb

MetalLB has a Layer 2 mode and a BGP mode. Because BGP places requirements on the router, this test uses Layer 2 mode.

Create an IPAddressPool

Multiple IPAddressPool instances can coexist. Addresses can be defined by CIDR or by range, and both IPv4 and IPv6 addresses can be assigned.

k8s-master01 ➜ cat <<EOF > IPAddressPool.yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: default
spec:
  addresses:
  # Assignable IP addresses; multiple entries are allowed, IPv4 and IPv6
  - 10.69.10.51-10.69.10.70
EOF
k8s-master01 ➜ kubectl apply -f IPAddressPool.yaml
ipaddresspool.metallb.io/first-pool created

Create an L2Advertisement

Layer 2 mode does not require the IPs to be bound to the network interfaces of the worker nodes. It works by answering ARP requests on the local network, handing the node's MAC address to clients. If an L2Advertisement is not associated with a specific IPAddressPool, it applies to all available IPAddressPools by default.

Create the L2Advertisement and associate it with the IPAddressPool:

k8s-master01 ➜ cat <<EOF > L2Advertisement.yaml
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: advertisement.example
  namespace: default
spec:
  ipAddressPools:
  - first-pool # the address pool created in the previous step, referenced by name
EOF
k8s-master01 ➜ kubectl apply -f L2Advertisement.yaml
l2advertisement.metallb.io/advertisement.example created

Install the dashboard

Add the repo

k8s-master01 ➜ helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/
k8s-master01 ➜ helm repo update

Install the v3 release with Helm

k8s-master01 ➜ helm install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard \
  --set=app.ingress.hosts="{localhost,kubernetes.dashboard.webmaster.me}" \
  --set=nginx.enabled="false" \
  --set=cert-manager.enabled="false" \
  --set=metrics-server.enabled="false"

NAME: kubernetes-dashboard

LAST DEPLOYED: Fri Aug 11 00:45:19 2023

NAMESPACE: kubernetes-dashboard

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

*************************************************************************************************

*** PLEASE BE PATIENT: Kubernetes Dashboard may need a few minutes to get up and become ready ***

*************************************************************************************************

Congratulations! You have just installed Kubernetes Dashboard in your cluster.

It looks like you already have nginx installed in your cluster. First find the namespace where it is installed and then find its main service name. By default, it should be located in namespace called nginx or nginx-ingress and service name should be nginx-controller.

To access Dashboard run (replace placeholders with actual names):

kubectl -n <nginx-namespace> port-forward svc/<nginx-service> 8443:443

Dashboard will be available at:

https://localhost:8443

Looks like you are deploying Kubernetes Dashboard on a custom domain(s).

Please make sure that the ingress configuration is valid.

Dashboard should be accessible on your configured domain(s) soon:

- https://kubernetes.dashboard.webmaster.me

NOTE: It may take a few minutes for the Ingress IP/Domain to be available.

It does not apply to local dev Kubernetes installations such as kind, etc.

You can watch the status using:

kubectl -n kubernetes-dashboard get ing kubernetes-dashboard -w

Create a user account

k8s-master01 ➜ kubectl create serviceaccount kube-dashboard-admin-sa -n kubernetes-dashboard serviceaccount/kube-dashboard-admin-sa created k8s-master01 ➜ kubectl create clusterrolebinding kube-dashboard-admin-sa \ --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kube-dashboard-admin-sa clusterrolebinding.rbac.authorization.k8s.io/kube-dashboard-admin-sa created k8s-master01 ➜ kubectl create token kube-dashboard-admin-sa -n kubernetes-dashboard --duration=87600h eyJhbGciOiJSUzI1NiIsImtpZCI6IncwY0JSRUVKbnZ5YWU3SU5PRkZTMTR5OHhzenMzXzRPSlJjNDVTdjllSzQifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoyMDA3MDQ5MDIxLCJpYXQiOjE2OTE2ODkwMjEsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJrdWJlLWRhc2hib2FyZC1hZG1pbi1zYSIsInVpZCI6IjVkZWM2ODFlLTdiZDEtNDAwMC04MThiLTQ3ZGRkZWZkNzA5NSJ9fSwibmJmIjoxNjkxNjg5MDIxLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6a3ViZS1kYXNoYm9hcmQtYWRtaW4tc2EifQ.1yXJS6jxhr6_ZbWZVV56vQx7c96plB3cddpmx5Vi7fvEhfe6ZU2D3PD8hwlVqktB26KR2qE1jgX8tIFQg-Qw5u7Wy8y6Z7crAosM6k_u6DjMqfV5cD_CoEtuUTsq_09T1t7-LO8neSmgHc7ViV96tbsCsfWtm1vYXXjBWmcw2AAZPibBLO14jpNj_nmNJlTZVYQaBKTA4TIdjaGe-Apof0WCRy4z4ORmipwJCAm9tmOm_YEt2j080P4sdIqDefvYJ14ZfkHT1_dIBSwxKm_BvxSwHP7c0n1kr-nk8CUqwm3PSW3rjo1msyj9iFDSFYKbh675_RoXMnWIKsMBp40Rtw

Get the access IP

Check the ingress-nginx Services:

k8s-master01 ➜ kubectl -n ingress-nginx get svc
NAME                                 TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             LoadBalancer   10.111.164.184   10.69.10.51   80:30823/TCP,443:31214/TCP   66m
ingress-nginx-controller-admission   ClusterIP      10.98.126.47     <none>        443/TCP                      66m

The Service list shows that ingress-nginx-controller was automatically assigned the external IP 10.69.10.51, the first address in the MetalLB pool.
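The external IP can also be read programmatically, which helps when scripting the hosts entry. The sketch below assumes the Service name and namespace created by the ingress-nginx manifest above (`ingress-nginx-controller` in `ingress-nginx`); the function names are illustrative.

```shell
#!/usr/bin/env bash
# Print the external IP that the load balancer assigned to the ingress controller.
ingress_external_ip() {
  kubectl -n ingress-nginx get svc ingress-nginx-controller \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Print an /etc/hosts line for the given hostname, or fail if no IP is assigned yet.
hosts_entry() {
  local ip
  ip="$(ingress_external_ip)"
  if [ -z "$ip" ]; then
    echo "no external IP assigned yet" >&2
    return 1
  fi
  printf '%s %s\n' "$ip" "$1"
}

# Example:
#   hosts_entry kubernetes.dashboard.webmaster.me
```

An empty result usually means the Service is still `<pending>`, which would point back at the MetalLB IPAddressPool or L2Advertisement.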

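Configure hosts

Add a hosts entry on the host machine or on any other machine that needs access:

k8s-master01 ➜ cat >> /etc/hosts <<EOF
10.69.10.51 kubernetes.dashboard.webmaster.me
EOF

Access the dashboard

Open https://kubernetes.dashboard.webmaster.me in a browser and log in to the dashboard with the token generated above.

This article draws mainly on abcdocker and other online articles. Please let me know of any infringement.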
